Five years from now, every serious software company will have an AI backend.

Not a chatbot. Not a copilot. Not a drag-and-drop workflow.

A reasoning layer that sits alongside their services, making decisions that used to be hardcoded, handling complexity that used to be impossible.

When websites became applications, we separated frontend from backend. When data outgrew the app database, we built data lakes and pipelines.

Something similar is starting now, but most people don't see it yet. They're watching chatbots.

Beyond the Chatbot

The current conversation around AI in software is too narrow. It focuses on the surface: a chat widget in the corner, a copilot suggesting code, an assistant that automates what a user would have done manually. These are real, and they have value, but they miss the larger shift.

Software keeps adding layers when complexity demands it. The frontend/backend split gave us separation of concerns. Data engineering gave us pipelines and lakes when analytics outgrew the app database. Each shift created new infrastructure for problems that didn't fit anywhere else.

There's a layer missing.

A reasoning layer. One that doesn't replace your services but augments them. It sits alongside your order service, your payment service, your notification service. At every decision point where you currently have hardcoded rules or brittle if-else chains, the reasoning layer provides intelligence that adapts to context.
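To make "decision point" concrete, here is a minimal Python sketch built around a hypothetical order-review step. The existing hardcoded rule stays in place as the fallback. The reasoning layer is consulted first, and its answer is used only if it falls within an allowed set. `OrderContext`, `hardcoded_review`, `reasoned_review`, and `stub_reasoner` are illustrative names. The stub stands in for what would be an LLM call in practice, and none of this is AgentField's API.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class OrderContext:
    # Hypothetical signals available at the decision point.
    customer_tier: str
    order_total: float
    fraud_score: float


def hardcoded_review(ctx: OrderContext) -> str:
    # The existing brittle rule: one threshold, no context.
    return "manual_review" if ctx.order_total > 500 else "approve"


ALLOWED = {"approve", "manual_review", "reject"}


def reasoned_review(ctx: OrderContext,
                    reasoner: Callable[[OrderContext], Optional[str]]) -> str:
    # Consult the reasoning layer first; keep the rule as the fallback.
    try:
        proposal = reasoner(ctx)
    except Exception:
        proposal = None  # a failed call must not break the order flow
    if proposal in ALLOWED:
        return proposal
    return hardcoded_review(ctx)


def stub_reasoner(ctx: OrderContext) -> Optional[str]:
    # Hypothetical stand-in for an LLM call that weighs context.
    if ctx.fraud_score > 0.9:
        return "reject"
    if ctx.customer_tier == "gold" and ctx.fraud_score < 0.1:
        return "approve"
    return None  # no opinion: defer to the rule


print(reasoned_review(OrderContext("gold", 800.0, 0.05), stub_reasoner))  # approve
```

Keeping the old rule as the fallback is what makes this an augmentation rather than a replacement. If the reasoner fails or answers out of bounds, the service behaves exactly as it did before.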

Your backend becomes software that can think. Decisions that were static become dynamic, hardcoded logic becomes adaptive, and what was impossible to encode becomes tractable.

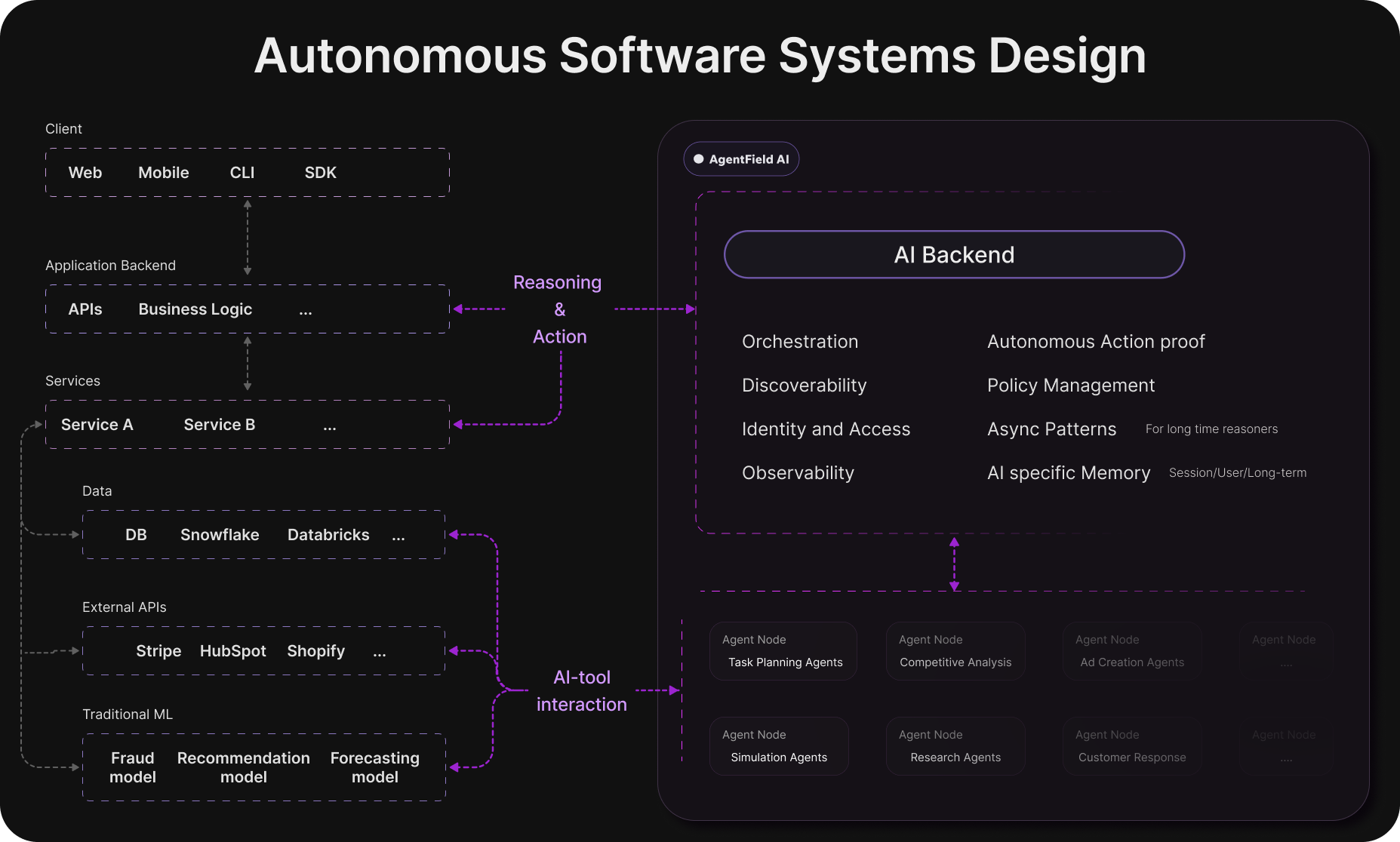

An AI backend isn't a feature you bolt onto your product but a layer you add to your architecture.

Autonomous Software Systems Design

Two Dead Ends

Two approaches dominate right now, and both fail.

The DAG trap. People treat AI like data pipelines: fixed steps, explicit flows, one node feeding the next. DAG stands for directed acyclic graph, a structure borrowed from ML training where data flows one way through a predetermined sequence. This made sense for batch predictions, but reasoning doesn't work this way. It needs to adapt, reconsider, and loop back.

Force reasoning into a DAG and you've just built a more expensive rules engine. No one builds a payment system by drawing the entire API call graph upfront and executing it as a rigid pipeline; you build composable services. Yet that's exactly what most agent frameworks demand: rigid graphs, not composable intelligence.

The autonomous agent fantasy. The opposite extreme: one orchestrator with access to every tool, deciding on the fly. It is the pattern spreading fastest right now, and it demos beautifully but fails catastrophically in production. You can't run a backend you can't predict, and you can't operate a system you can't audit. Complete autonomy isn't a feature; it's a liability.

There's a third way: something that reasons freely within boundaries you define. We call this guided autonomy.
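One way to read guided autonomy is as a loop with guardrails. The sketch below uses a hypothetical inventory example. A reasoner picks the next action freely, including revisiting an earlier step, which a DAG cannot express. The runtime enforces an allow-list of actions and a step budget, and out-of-bounds proposals are refused and escalated rather than executed. `Boundaries`, `run_guided`, and the stub `propose` function are illustrative assumptions; a real reasoner would be a model call.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, FrozenSet, List, Tuple


@dataclass(frozen=True)
class Boundaries:
    allowed_actions: FrozenSet[str]  # what the reasoner may do
    max_steps: int                   # hard budget on the loop


@dataclass
class Trace:
    steps: List[Tuple[str, str]] = field(default_factory=list)
    outcome: str = "incomplete"


def run_guided(state: dict,
               propose: Callable[[dict], str],
               actions: Dict[str, Callable[[dict], dict]],
               bounds: Boundaries) -> Trace:
    trace = Trace()
    for _ in range(bounds.max_steps):
        action = propose(state)  # the reasoner chooses freely...
        if action == "done":
            trace.outcome = "done"
            return trace
        if action not in bounds.allowed_actions or action not in actions:
            # ...but out-of-bounds proposals are refused, never executed.
            trace.steps.append(("refused", action))
            trace.outcome = "escalated"
            return trace
        state = actions[action](state)
        trace.steps.append(("ran", action))
    trace.outcome = "budget_exhausted"
    return trace


# Hypothetical example: the reasoner may loop back to re-check inventory.
def propose(state: dict) -> str:
    if not state.get("checked"):
        return "check_inventory"
    if state["stock"] < state["qty"]:
        return "transfer_stock"
    return "done"


ACTIONS = {
    "check_inventory": lambda s: {**s, "checked": True},
    "transfer_stock": lambda s: {**s, "stock": s["stock"] + 5, "checked": False},
}

bounds = Boundaries(frozenset(ACTIONS), max_steps=10)
print(run_guided({"stock": 2, "qty": 4}, propose, ACTIONS, bounds).outcome)  # done
```

The reasoner decides *what* to do next. The boundaries decide *whether* it is allowed and *how long* it may keep going.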

What This Looks Like

To see guided autonomy in practice, consider three domains where the same pattern emerges: existing services gain contextual intelligence.

E-commerce order flow. In a traditional backend, an order triggers a fixed sequence: check inventory, charge payment, assign fulfillment, send notification. Each step follows predetermined rules. With a reasoning layer, every decision point becomes contextual. Which warehouse should handle this order? That depends on the customer's location, their history, current inventory levels, delivery promises, and a dozen signals that are hard to encode as rules. What's the right retry strategy when a payment fails? The answer shifts with customer value, fraud signals, and the cost of losing this transaction. Notification timing adapts to communication preferences and urgency.

But here's what really changes, and what no rule system could do: the system can negotiate. It trades off delivery speed against capacity in real time, creating personalized commitments that no rule could encode. It reasons about what matters to this customer right now.

The services stay the same, the flow stays the same, but brittle decisions become intelligent ones, and capabilities that were impossible become routine.
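The warehouse decision can be sketched the same way. This version uses hypothetical `Warehouse` records and a stub reasoner in place of a model call. Hard constraints (enough stock, meets the delivery promise) are enforced in code first. The reasoner only chooses among feasible options, and an invalid pick falls back to an assumed previous rule, "cheapest feasible warehouse".

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence


@dataclass(frozen=True)
class Warehouse:
    name: str
    stock: int
    eta_days: int
    cost: float


def feasible(warehouses: Sequence[Warehouse], qty: int, promised_days: int) -> List[Warehouse]:
    # Hard constraints live in code, not in the prompt.
    return [w for w in warehouses if w.stock >= qty and w.eta_days <= promised_days]


Reasoner = Callable[[List[Warehouse], dict], Optional[str]]


def choose_warehouse(warehouses: Sequence[Warehouse], qty: int, promised_days: int,
                     context: dict, reasoner: Reasoner) -> Optional[Warehouse]:
    options = feasible(warehouses, qty, promised_days)
    if not options:
        return None  # nothing satisfies the constraints: escalate to a human
    pick = reasoner(options, context)
    by_name = {w.name: w for w in options}
    if pick in by_name:
        return by_name[pick]
    # Invalid or missing pick: fall back to the old rule (cheapest feasible).
    return min(options, key=lambda w: w.cost)


def stub_reasoner(options: List[Warehouse], context: dict) -> Optional[str]:
    # Hypothetical stand-in for a model weighing customer context.
    if context.get("vip"):
        return min(options, key=lambda w: w.eta_days).name
    return None


WAREHOUSES = [Warehouse("A", 10, 5, 3.0), Warehouse("B", 10, 1, 9.0), Warehouse("C", 1, 1, 1.0)]
print(choose_warehouse(WAREHOUSES, 2, 5, {"vip": True}, stub_reasoner).name)  # B
```

Warehouse C is never offered to the reasoner for a two-unit order because it fails the stock constraint. The model's judgment only operates inside the feasible set.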

SaaS subscription backend. Billing, usage tracking, and churn prevention are full of decisions currently handled by static thresholds. With a reasoning layer, the system observes usage patterns and reasons about when to suggest an upgrade. A failed payment doesn't trigger a one-size-fits-all retry schedule; the system reasons about what strategy fits this customer. Declining engagement prompts not a generic email campaign but a reasoned intervention tailored to this account.
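For the payment-retry case, one plausible division of labor is that the reasoner proposes a schedule and a fixed policy clamps it. The sketch below expresses schedules as hours after the failed charge. `RetryPolicy` and its limits (at most four attempts, each at least 24 hours after the previous event, all within 14 days) are hypothetical values, not recommendations.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class RetryPolicy:
    # Hypothetical limits; tune per business.
    max_attempts: int = 4
    min_gap_hours: float = 24.0
    max_window_hours: float = 14 * 24.0


def enforce(proposed_hours: Iterable[float], policy: RetryPolicy) -> List[float]:
    """Clamp a proposed retry schedule (hours after the failed charge) to policy."""
    schedule: List[float] = []
    last = 0.0  # the failure itself, at t = 0
    for h in sorted(proposed_hours):
        h = max(h, last + policy.min_gap_hours)  # push too-early retries later
        if h > policy.max_window_hours or len(schedule) >= policy.max_attempts:
            break
        schedule.append(h)
        last = h
    return schedule


# A reasoner that proposes aggressive retries gets spaced out by the policy.
print(enforce([1, 2, 3], RetryPolicy()))  # [24.0, 48.0, 72.0]
```

The reasoner can still tailor timing per customer, for example waiting until just after a known payday, but it cannot exceed the attempt count or the window.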

The system maintains actual relationships, not just logs, because it has memory. It knows this customer struggled with onboarding six months ago, knows they're in a regulated industry, knows their champion just got promoted. When it decides to reach out, that's not a trigger firing; it's judgment.
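Memory here means durable, per-account context the reasoner can draw on. A minimal in-process sketch, using hypothetical `Memory` and `AccountMemory` types, stores dated notes by topic and renders the most recent relevant ones as plain text for a prompt. A production system would need persistent storage and retention controls; this only shows the shape.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date
from typing import DefaultDict, List, Optional, Set


@dataclass(frozen=True)
class Memory:
    when: date
    topic: str
    note: str


class AccountMemory:
    """Hypothetical per-account memory; in-process only, for illustration."""

    def __init__(self) -> None:
        self._by_account: DefaultDict[str, List[Memory]] = defaultdict(list)

    def remember(self, account: str, memory: Memory) -> None:
        self._by_account[account].append(memory)

    def recall(self, account: str, topics: Optional[Set[str]] = None,
               limit: int = 5) -> List[Memory]:
        items = self._by_account.get(account, [])
        if topics is not None:
            items = [m for m in items if m.topic in topics]
        # Most recent first, capped so prompts stay small.
        return sorted(items, key=lambda m: m.when, reverse=True)[:limit]

    def as_context(self, account: str, **kwargs) -> str:
        # Render memories as plain lines a reasoner can read.
        return "\n".join(f"{m.when.isoformat()} [{m.topic}] {m.note}"
                         for m in self.recall(account, **kwargs))


mem = AccountMemory()
mem.remember("acme", Memory(date(2024, 1, 10), "onboarding", "struggled with SSO setup"))
mem.remember("acme", Memory(date(2024, 2, 1), "compliance", "operates in a regulated industry"))
mem.remember("acme", Memory(date(2024, 7, 15), "people", "champion promoted"))
print(mem.as_context("acme", limit=2))
```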

Marketplace platforms. Search ranking, trust scoring, and dispute resolution currently rely on algorithms and rules that struggle with edge cases. A reasoning layer adapts ranking to buyer context in real time, synthesizes trust signals holistically rather than applying rigid formulas, and handles disputes with contextual reasoning.

The system mediates disputes by synthesizing everything (transaction history, message tone, policy intent, platform norms) and reaches resolutions that feel fair to both parties. It is not a decision tree or an escalation queue but actual judgment, running at thousands of disputes per hour.

The pattern holds across all of these: same services, same infrastructure. What changes is that rules become policies and static logic becomes reasoning.

Why Now

Two shifts made this architectural change possible.

First, large language models crossed a capability threshold. For decades, machine learning meant prediction: given inputs, produce an output. Useful, but limited. LLMs introduced something different: they reason. They weigh tradeoffs, interpret context, and follow nuanced instructions, and this capability applies not just to generating text but to making decisions.

Second, the infrastructure patterns are emerging. Just as Kubernetes didn't appear overnight but emerged from years of distributed systems learning, the patterns for running reasoning at scale inside backends are starting to crystallize. How do you deploy, test, and observe reasoning logic in production? How do you enforce policies when reasoning is part of the stack? These questions are getting answers.

What's emerging isn't a new type of application but a new capability that existing applications can adopt. Your backend doesn't become an AI product; it becomes autonomous software that reasons within boundaries you define.

The reasoning layer operates within policies, constraints, and objectives you specify, in a way that can always be audited. Autonomous in the same way a good employee is autonomous: capable of judgment but working within a framework of expectations. Predictable enough to trust, flexible enough to be useful.

What Comes Next

The infrastructure for AI backends is in its early stages. Most teams trying to add intelligence to their backends today are doing it ad hoc: a few LLM calls here, some prompt engineering there, no coherent abstraction or operational model.

AgentField is built to provide that infrastructure: the layer that makes autonomous software production-ready.

Scale. Building production AI backends means solving problems that have nothing to do with intelligence: durable queues, async execution, backpressure, retries, health checks, service discovery. AgentField handles all of it, so you can focus on the reasoning instead of the machinery needed to run it at scale. What works on your laptop deploys to Kubernetes without rewrites.
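Two of those operational concerns, retries and backpressure, fit in a few lines of standard-library Python. This is a generic sketch, not AgentField's implementation. `call_with_retries` retries a hypothetical `TransientError` with exponential backoff and "full jitter". `submit` refuses work when a bounded queue is full, so callers can shed load or slow down. The `sleep` and `rng` parameters exist only to make the sketch testable.

```python
import queue
import random
import time
from typing import Any, Callable, Optional, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (timeouts, 503s)."""


def call_with_retries(fn: Callable[[], T],
                      max_attempts: int = 4,
                      base: float = 0.5,
                      cap: float = 30.0,
                      sleep: Callable[[float], None] = time.sleep,
                      rng: Optional[random.Random] = None) -> T:
    rng = rng or random.Random()
    for attempt in range(max_attempts):
        try:
            return fn()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error
            # Exponential backoff with full jitter: wait U(0, min(cap, base * 2^n)).
            sleep(rng.uniform(0, min(cap, base * 2 ** attempt)))
    raise ValueError("max_attempts must be at least 1")


def submit(q: "queue.Queue[Any]", job: Any) -> bool:
    """Enqueue without blocking; False signals backpressure to the caller."""
    try:
        q.put_nowait(job)
        return True
    except queue.Full:
        return False
```

Jitter spreads retries from many clients over time instead of synchronizing them, and a bounded queue turns overload into an explicit signal rather than unbounded memory growth.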

Trust. In traditional backends, inter-service security and audit trails matter but rarely make or break the system, because services execute deterministic code. When software makes autonomous decisions, the stakes change: Who authorized this agent? What were its inputs? Can you prove it to an auditor? Every AgentField agent gets a cryptographic identity (we unpack the architecture in our IAM for AI Backends blog post), and every decision is signed, creating tamper-evident records. The guided autonomy we described becomes enforceable infrastructure.
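Signed, chained decision records can also be sketched with the standard library. This illustration is not AgentField's scheme, which the IAM post covers. It uses HMAC-SHA256 with a shared demo key as a stand-in for per-agent signatures, and it links each record to the previous signature so that editing or deleting a record breaks verification. `DecisionLog` and its fields are hypothetical.

```python
import hashlib
import hmac
import json


class DecisionLog:
    """Hypothetical append-only log; HMAC stands in for per-agent signatures."""

    def __init__(self, agent_id: str, key: bytes) -> None:
        self.agent_id = agent_id
        self._key = key
        self.records = []

    def _sign(self, body: dict) -> str:
        # Canonical JSON so the same body always produces the same bytes.
        payload = json.dumps(body, sort_keys=True, separators=(",", ":")).encode()
        return hmac.new(self._key, payload, hashlib.sha256).hexdigest()

    def append(self, inputs: dict, decision: str) -> dict:
        prev = self.records[-1]["sig"] if self.records else ""
        body = {"agent": self.agent_id, "inputs": inputs,
                "decision": decision, "prev": prev}  # chain to the previous record
        record = {**body, "sig": self._sign(body)}
        self.records.append(record)
        return record

    def verify(self) -> bool:
        prev = ""
        for rec in self.records:
            body = {k: v for k, v in rec.items() if k != "sig"}
            if body["prev"] != prev or not hmac.compare_digest(rec["sig"], self._sign(body)):
                return False  # edited, reordered, or missing record
            prev = rec["sig"]
        return True


log = DecisionLog("order-agent", b"demo-key")
log.append({"order": 42, "fraud_score": 0.05}, "approve")
log.append({"order": 43, "fraud_score": 0.97}, "reject")
print(log.verify())  # True
```

With HMAC, anyone who can verify can also forge. A real deployment would use public-key signatures (for example Ed25519), so auditors can check records without holding the signing key.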

Think Kubernetes for orchestration. Okta for identity. Purpose-built for software that reasons.

AgentField is open source.